Butterfly effects in perceptual development: adaptive initial degradations

Human perceptual development begins with limited sensory capabilities that gradually mature into robust proficiencies. Studies of late-sighted children, who skip these initial stages of development, as well as simulations with deep neural networks, indicate that these early degradations serve as scaffolds for development rather than as hurdles. Conversely, dispensing with these degradations compromises later development. These findings inform our understanding of typical and atypical development and provide inspiration for the design of more robust computational model systems.

Potential downside of high initial visual acuity (Vogelsang et al., PNAS, 2018)

[Paper]

[Response]

[Related book chapter]

[MIT News video]

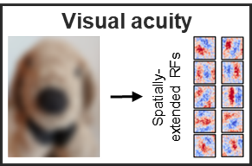

Newborns begin life with poor visual acuity. Data from late-sighted individuals and computational simulations suggest that such early acuity limitations are adaptive. They may help instantiate extended spatial integration mechanisms, which are key to robust performance on visual tasks such as face recognition.
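One way to mimic this progression in a simulation is to blur training images heavily at first and reduce the blur as training proceeds. The sketch below is a minimal illustration under stated assumptions. It uses a Gaussian blur whose standard deviation (in pixels) shrinks linearly to zero. The helper names `blur` and `acuity_sigma`, the linear schedule and the parameter values are all hypothetical and are not taken from the paper.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel; sigma is in pixels (sigma <= 0 means no blur)."""
    if sigma <= 0:
        return np.array([1.0])
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D grayscale image, using edge padding."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(image, r, mode="edge")
    # Convolve every row, then every column; "valid" mode restores the original size.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def acuity_sigma(epoch: int, n_blur_epochs: int, sigma_start: float) -> float:
    """Hypothetical schedule: blur falls linearly from sigma_start to 0 over n_blur_epochs."""
    if epoch >= n_blur_epochs:
        return 0.0
    return sigma_start * (1.0 - epoch / n_blur_epochs)
```

In a training loop, each image would be passed through `blur(img, acuity_sigma(epoch, n_blur_epochs, sigma_start))` before the forward pass. A control network trained only on unblurred images would correspond to skipping the initial degradation.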

Impact of early visual experience on later usage of color cues (Vogelsang et al., Science, 2024)

[Paper]

[MIT News story]

[Science Podcast]

[The Hindu article]

[Yale Scientific feature]

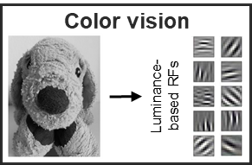

As with visual acuity, newborns initially exhibit poor color sensitivity. Experiments with late-sighted children and computational models suggest that these initial degradations may have functional significance and could underlie our remarkable resilience to color variations encountered later in life.
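An analogous manipulation for color is to begin training on grayscale images and gradually restore chromatic information. The sketch below is a minimal illustration under stated assumptions. It blends each RGB image with its luminance, using ITU-R BT.601 luma weights as a simple proxy. The helpers `desaturate` and `color_weight` and the grayscale-then-ramp schedule are hypothetical and are not the paper's procedure.

```python
import numpy as np

# ITU-R BT.601 luma weights, used here only as a simple luminance proxy.
LUMA = np.array([0.299, 0.587, 0.114])

def desaturate(rgb: np.ndarray, color_weight: float) -> np.ndarray:
    """Blend an H x W x 3 image between grayscale (0.0) and full color (1.0)."""
    gray = rgb @ LUMA                                # H x W luminance map
    gray3 = np.repeat(gray[..., None], 3, axis=-1)   # replicate into 3 channels
    return color_weight * rgb + (1.0 - color_weight) * gray3

def color_weight(epoch: int, n_gray_epochs: int, n_ramp_epochs: int) -> float:
    """Hypothetical schedule: pure grayscale first, then a linear ramp to full color."""
    if epoch < n_gray_epochs:
        return 0.0
    if n_ramp_epochs <= 0:
        return 1.0
    return min(1.0, (epoch - n_gray_epochs) / n_ramp_epochs)
```

Robustness to color variation could then be probed by testing the trained network on images whose hue or color balance has been shifted. That evaluation step is not shown here.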

Potential role of developmental experience in the emergence of the parvo-magno distinction (Vogelsang et al., Communications Biology, 2025)

[Paper]

[MIT News story]

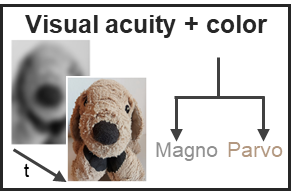

Considering visual acuity and color sensitivity together, simulations in which deep networks are trained with this joint developmental progression suggest that the temporal confluence of spatial-frequency and color-sensitivity development also significantly shapes response properties characteristic of the division between the parvocellular and magnocellular systems.
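A joint progression can be sketched by tying blur and desaturation to a single developmental "progress" value between 0 (newborn-like) and 1 (mature). The sketch below is a minimal illustration under stated assumptions. It applies a Gaussian low-pass filter in the frequency domain, which assumes periodic image boundaries, followed by a luminance blend. The function `degrade`, the shared linear coupling of the two degradations and `sigma_max` are hypothetical and are not taken from the paper.

```python
import numpy as np

# ITU-R BT.601 luma weights as a simple luminance proxy.
LUMA = np.array([0.299, 0.587, 0.114])

def degrade(rgb: np.ndarray, progress: float, sigma_max: float = 4.0) -> np.ndarray:
    """Jointly blur and desaturate an H x W x 3 image; progress in [0, 1], 1 = no degradation."""
    p = float(np.clip(progress, 0.0, 1.0))
    sigma = sigma_max * (1.0 - p)                    # blur (pixels) shrinks as progress grows
    h, w, _ = rgb.shape
    fy = np.fft.fftfreq(h)[:, None]                  # vertical frequencies, cycles/pixel
    fx = np.fft.fftfreq(w)[None, :]                  # horizontal frequencies, cycles/pixel
    # Fourier transform of a Gaussian with std sigma: a Gaussian low-pass transfer function.
    transfer = np.exp(-2.0 * np.pi ** 2 * sigma ** 2 * (fx ** 2 + fy ** 2))
    spectrum = np.fft.fft2(rgb, axes=(0, 1)) * transfer[..., None]
    blurred = np.real(np.fft.ifft2(spectrum, axes=(0, 1)))
    gray = blurred @ LUMA                            # H x W luminance
    # Early in development the image is mostly gray; color returns as p approaches 1.
    return p * blurred + (1.0 - p) * gray[..., None]
```

Coupling both degradations to one variable is the simplest choice for illustration. Separate schedules for acuity and color would let their relative timing be varied independently.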

Prenatal auditory experience and its sequelae (Vogelsang et al., Developmental Science, 2022)

[Paper]

[MIT News story]

A human fetus can register environmental sounds. This in-utero experience, however, is restricted to the low-frequency components of auditory signals. Computational simulations suggest that such inputs yield temporally extended receptive fields, which are critical for tasks such as emotion recognition.
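The prenatal restriction can be approximated in a simulation by low-pass filtering audio during an initial training phase. The sketch below is a minimal illustration under stated assumptions. It uses an ideal brick-wall filter in the frequency domain. The function name `lowpass` and any particular cutoff frequency are hypothetical, and the in-utero filtering characteristics used in the paper may differ.

```python
import numpy as np

def lowpass(signal: np.ndarray, sample_rate: float, cutoff_hz: float) -> np.ndarray:
    """Ideal low-pass filter: zero every spectral component above cutoff_hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)  # bin frequencies in Hz
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))               # back to a real waveform
```

A "prenatal" phase would feed `lowpass(x, sample_rate, cutoff_hz)` outputs to the network and then switch to unfiltered audio, while a control network would see full-band audio throughout.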

Butterfly effects in perceptual development: A review of the ‘adaptive initial degradation’ hypothesis (Vogelsang et al., Developmental Review, 2024)

[Paper]

Here, we review the ‘adaptive initial degradation’ hypothesis across the visual and auditory domains. We propose that early perceptual limitations may be akin to the flapping of a butterfly’s wings, setting up small eddies that manifest in due time as significant salutary effects on later perceptual skills.